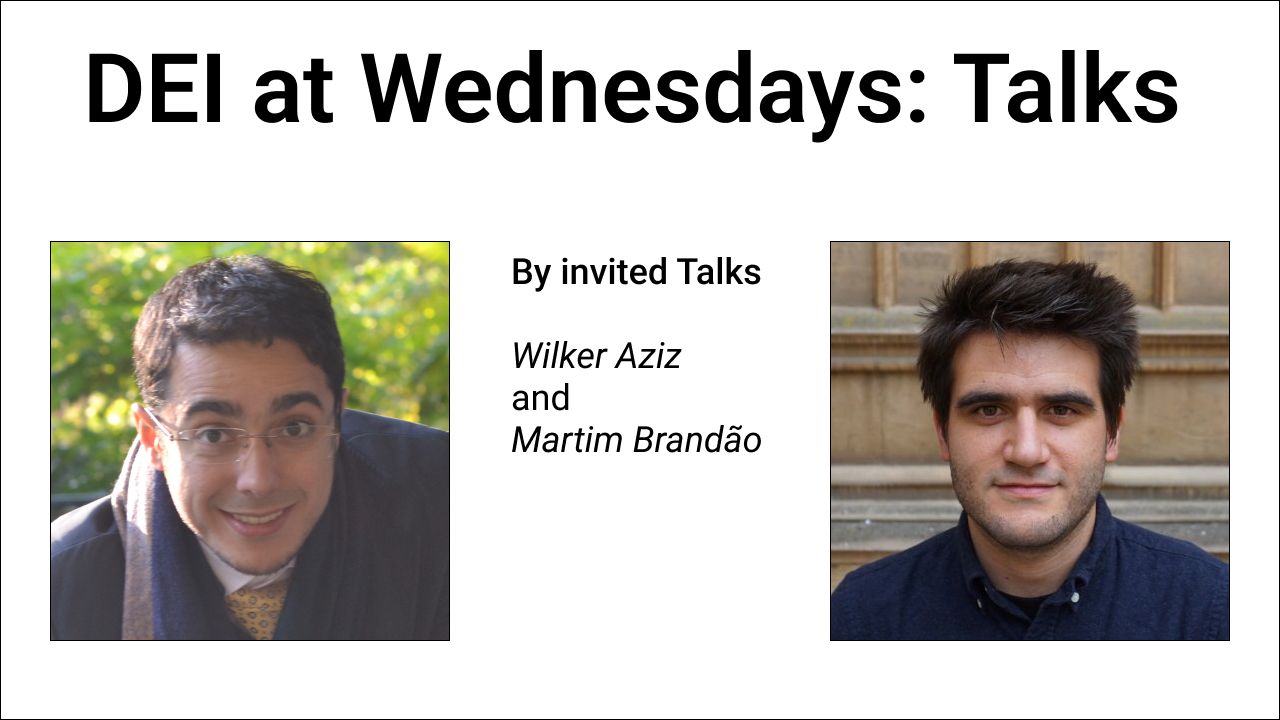

DEI at Wednesdays: Talks, 23-03-2022

Wilker Ferreira Aziz

Title: Learning to Represent Variation in Linguistic Data

Abstract: A defining feature of natural languages is variation: ideas can be expressed in different ways; different words mean the same; the same words mean differently. There's variation that's inherent to linguistic devices (morphology, syntax, discourse), there's variation due to the background of the speaker, there's variation that's used to convey opinions and/or imbue one with emotions, and there's variation due to seemingly arbitrary confounders such as fatigue, concentration level, and boredom. Despite this unavoidable truth, many of the state-of-the-art models for natural language processing cannot account for unobserved factors of variation, instead conflating such factors under a single hypothesis. In this talk I will discuss how to equip these models with statistical tools that promote an explicit account of variation and demonstrate a few applications aimed at different levels of linguistic variability (e.g. sentence-level, word-level, and subword-level). I'll close the talk with thoughts on the role of inductive biases such as multimodality and sparsity in the learning of disentangled factors of variation.

Bio: I am an Assistant Professor at the Institute for Logic, Language and Computation, University of Amsterdam. My research is concerned with learning to represent, understand, and generate linguistic data. Examples of specific problems I am interested in include language modelling, machine translation, syntactic parsing, textual entailment, text classification, and question answering. I also develop techniques to approach general machine learning problems such as probabilistic inference, gradient and density estimation. My interests sit at the intersection of disciplines such as statistics, machine learning, approximate inference, global optimisation, formal languages, and computational linguistics. I often contribute to the committees of major CL/NLP and ML venues (e.g., *ACL, EMNLP, Coling, NeurIPS, ICML, ICLR), including serving as area chair (e.g., ACL since 2020, ICLR since 2021). You can learn more about my research group from https://probabll.github.io.

Website: https://wilkeraziz.github.io

--

Martim Brandao

Title: Issues of fairness in concrete AI algorithms: person detectors, drowsiness estimators, motion planners

Abstract: Recently, much attention has been given to risks of AI in terms of bias, discrimination, transparency, accountability, etc. This has given rise to a plethora of proposals of general AI ethics principles, though few are practical enough to guide new system implementations. In this presentation I will describe practical issues of fairness in AI algorithms for robotics and other physical systems: person detectors, drowsiness estimators, and motion planners. I will try to convince you that there is a dimension of fairness attached to each of these algorithms, describe where it comes from, and propose how it can potentially be mitigated.

Bio: Martim is an incoming Assistant Professor in Robotics and Autonomous Systems at King's College London. His research focuses on planning, optimization, and vision algorithms for robotics - as well as issues of fairness, transparency and ethics in these algorithms. Previously he was a post-doc at King's College London, the University of Oxford, and Waseda University.

Website: https://www.martimbrandao.com/